SEO Spider Tabs

User Guide

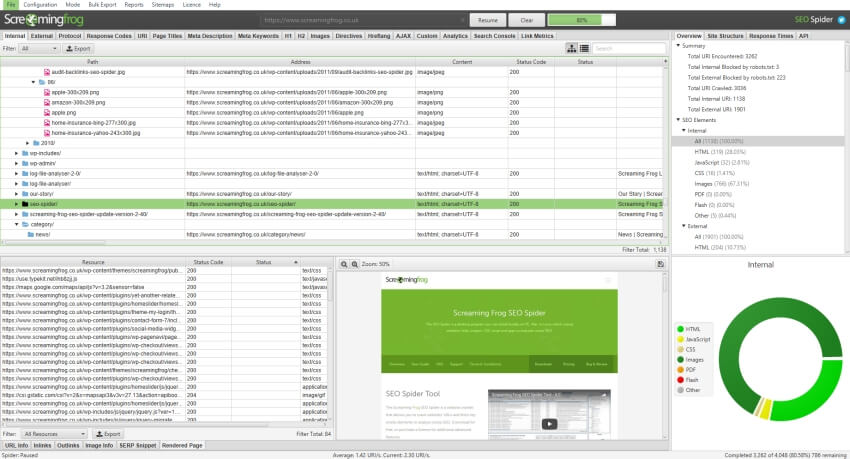

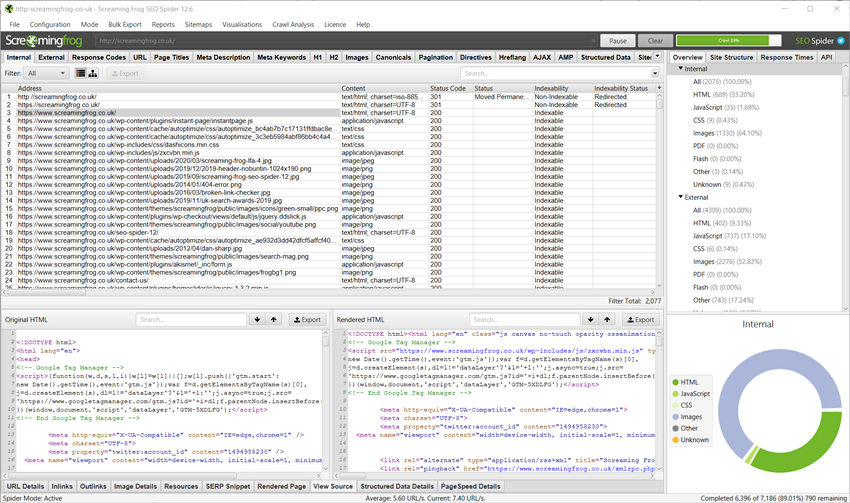

Internal

The Internal tab combines all data extracted from most other tabs, except the external, hreflang and structured data tabs. This means all data can be viewed comprehensively, and exported together for further analysis.

URLs classed as ‘Internal’ are on the same subdomain as the start page of the crawl. Other URLs can be treated as internal by using the ‘crawl all subdomains’ configuration, list mode, or the CDNs feature.

Columns

This tab includes the following columns.

- Address – The URL address.

- Content – The content type of the URL.

- Status Code – The HTTP response code.

- Status – The HTTP header response.

- Indexability – Whether the URL is Indexable or Non-Indexable.

- Indexability Status – The reason why a URL is Non-Indexable. For example, if it’s canonicalised to another URL.

- Title 1 – The (first) page title discovered on the page.

- Title 1 Length – The character length of the page title.

- Title 1 Pixel Width – The pixel width of the page title as described in our pixel width post.

- Meta Description 1 – The (first) meta description on the page.

- Meta Description Length 1 – The character length of the meta description.

- Meta Description Pixel Width – The pixel width of the meta description.

- Meta Keyword 1 – The meta keywords.

- Meta Keywords Length – The character length of the meta keywords.

- h1 – 1 – The first h1 (heading) on the page.

- h1 – Len-1 – The character length of the h1.

- h2 – 1 – The first h2 (heading) on the page.

- h2 – Len-1 – The character length of the h2.

- Meta Robots 1 – Meta robots directives found on the URL.

- X-Robots-Tag 1 – X-Robots-tag HTTP header directives for the URL.

- Meta Refresh 1 – Meta refresh data.

- Canonical Link Element – The canonical link element data.

- rel=“next” 1 – The SEO Spider collects these HTML link elements designed to indicate the relationship between URLs in a paginated series.

- rel=“prev” 1 – The SEO Spider collects these HTML link elements designed to indicate the relationship between URLs in a paginated series.

- HTTP rel=“next” 1 – The SEO Spider collects these HTTP link elements designed to indicate the relationship between URLs in a paginated series.

- HTTP rel=“prev” 1 – The SEO Spider collects these HTTP link elements designed to indicate the relationship between URLs in a paginated series.

- Size – The size of the resource, taken from the Content-Length HTTP header. If this field is not provided, the size is reported as zero. For HTML pages this is updated to the size of the (uncompressed) HTML. Upon export, size is in bytes, so please divide by 1,024 to convert to kilobytes.

- Transferred – The number of bytes that were actually transferred to load the resource, which might be less than the ‘size’ if compressed.

- Word Count – This is all ‘words’ inside the body tag, excluding HTML markup. The count is based upon the content area that can be adjusted under ‘Config > Content > Area’. By default, the nav and footer elements are excluded. You can include or exclude HTML elements, classes and IDs to calculate a refined word count. Our figures may not be exactly what performing this calculation manually would find, as the parser performs certain fix-ups on invalid HTML. Your rendering settings also affect what HTML is considered. Our definition of a word is taking the text and splitting it by spaces. No consideration is given to visibility of content (such as text inside a div set to hidden).

- Text Ratio – Number of non-HTML characters found in the HTML body tag on a page (the text), divided by the total number of characters the HTML page is made up of, and displayed as a percentage.

- Crawl Depth – Depth of the page from the start page (number of ‘clicks’ away from the start page). Please note, redirects are counted as a level currently in our page depth calculations.

- Folder Depth – Depth of the URL based upon the number of subfolders (/sub-folder/) in the URL path. This is not an SEO metric to optimise, but can be useful for segmentation, and advanced table search.

- Link Score – A metric between 0-100, which calculates the relative value of a page based upon its internal links, similar to Google’s own PageRank. For this column to populate, ‘crawl analysis’ is required.

- Inlinks – Number of internal hyperlinks to the URL. ‘Internal inlinks’ are links in anchor elements pointing to a given URL from the same subdomain that is being crawled.

- Unique Inlinks – Number of ‘unique’ internal inlinks to the URL. ‘Internal inlinks’ are links in anchor elements pointing to a given URL from the same subdomain that is being crawled. For example, if ‘page A’ links to ‘page B’ 3 times, this would be counted as 3 inlinks and 1 unique inlink to ‘page B’.

- Unique JS Inlinks – Number of ‘unique’ internal inlinks to the URL that are only in the rendered HTML after JavaScript execution. ‘Internal inlinks’ are links in anchor elements pointing to a given URL from the same subdomain that is being crawled. For example, if ‘page A’ links to ‘page B’ 3 times, this would be counted as 3 inlinks and 1 unique inlink to ‘page B’.

- % of Total – Percentage of unique internal inlinks (200 response HTML pages) to the URL from total internal HTML pages crawled. ‘Internal inlinks’ are links in anchor elements pointing to a given URL from the same subdomain that is being crawled.

- Outlinks – Number of internal outlinks from the URL. ‘Internal outlinks’ are links in anchor elements from a given URL to other URLs on the same subdomain that is being crawled.

- Unique Outlinks – Number of unique internal outlinks from the URL. ‘Internal outlinks’ are links in anchor elements from a given URL to other URLs on the same subdomain that is being crawled. For example, if ‘page A’ links to ‘page B’ on the same subdomain 3 times, this would be counted as 3 outlinks and 1 unique outlink to ‘page B’.

- Unique JS Outlinks – Number of unique internal outlinks from the URL that are only in the rendered HTML after JavaScript execution. ‘Internal outlinks’ are links in anchor elements from a given URL to other URLs on the same subdomain that is being crawled. For example, if ‘page A’ links to ‘page B’ on the same subdomain 3 times, this would be counted as 3 outlinks and 1 unique outlink to ‘page B’.

- External Outlinks – Number of external outlinks from the URL. ‘External outlinks’ are links in anchor elements from a given URL to another subdomain.

- Unique External Outlinks – Number of unique external outlinks from the URL. ‘External outlinks’ are links in anchor elements from a given URL to another subdomain. For example, if ‘page A’ links to ‘page B’ on a different subdomain 3 times, this would be counted as 3 external outlinks and 1 unique external outlink to ‘page B’.

- Unique External JS Outlinks – Number of unique external outlinks from the URL that are only in the rendered HTML after JavaScript execution. ‘External outlinks’ are links in anchor elements from a given URL to another subdomain. For example, if ‘page A’ links to ‘page B’ on a different subdomain 3 times, this would be counted as 3 external outlinks and 1 unique external outlink to ‘page B’.

- Closest Similarity Match – This shows the highest similarity percentage of a near duplicate URL. The SEO Spider will identify near duplicates with a 90% similarity match, which can be adjusted to find content with a lower similarity threshold. For example, if there were two near duplicate pages for a page with 99% and 90% similarity respectively, then 99% will be displayed here. To populate this column the ‘Enable Near Duplicates’ configuration must be selected via ‘Config > Content > Duplicates’, and post ‘Crawl Analysis’ must be performed. Only URLs with content over the selected similarity threshold will contain data, the others will remain blank. Thus by default, this column will only contain data for URLs with 90% or higher similarity, unless it has been adjusted via the ‘Config > Content > Duplicates’ and ‘Near Duplicate Similarity Threshold’ setting.

- No. Near Duplicates – The number of near duplicate URLs discovered in a crawl that meet or exceed the ‘Near Duplicate Similarity Threshold’, which is a 90% match by default. This setting can be adjusted under ‘Config > Content > Duplicates’. To populate this column the ‘Enable Near Duplicates’ configuration must be selected via ‘Config > Content > Duplicates’, and post ‘Crawl Analysis’ must be performed.

- Spelling Errors – The total number of spelling errors discovered for a URL. For this column to be populated then ‘Enable Spell Check’ must be selected via ‘Config > Content > Spelling & Grammar’.

- Grammar Errors – The total number of grammar errors discovered for a URL. For this column to be populated then ‘Enable Grammar Check’ must be selected via ‘Config > Content > Spelling & Grammar’.

- Language – The language selected for spelling and grammar checks. This is based upon the HTML language attribute, but the language can also be set via ‘Config > Content > Spelling & Grammar’.

- Hash – Hash value of the page using the MD5 algorithm. This is a duplicate content check for exact duplicate content only. If two hash values match, the pages are exactly the same in content. If there’s a single character difference, they will have unique hash values and not be detected as duplicate content. So this is not a check for near duplicate content. The exact duplicates can be seen under ‘URL > Duplicate’.

- Response Time – Time in seconds to download the URL. More detailed information can be found in our FAQ.

- Last-Modified – Read from the Last-Modified header in the server’s HTTP response. If the server does not provide this, the value will be empty.

- Redirect URI – If the ‘address’ URL redirects, this column will include the redirect URL target. The status code above will display the type of redirect, 301, 302 etc.

- Redirect Type – One of the following; HTTP Redirect: triggered by an HTTP header, HSTS Policy: turned around locally by the SEO Spider due to a previous HSTS header, JavaScript Redirect: triggered by execution of JavaScript (which can only occur when using JavaScript rendering) or Meta Refresh Redirect: triggered by a meta refresh tag in the HTML of the page.

- HTTP Version – This shows the HTTP version the crawl was under, which will be HTTP/1.1 by default. The SEO Spider currently only crawls using HTTP/2 in JavaScript rendering mode, if it’s enabled by the server.

- URL Encoded Address – The URL actually requested by the SEO Spider. All non-ASCII characters are percent-encoded; see RFC 3986 for further details.

- Title 2, meta description 2, h1-2, h2-2 etc – The SEO Spider will collect data from the first two elements it encounters in the source code. Hence, h1-2 is data from the second h1 heading on the page.
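Several of the column definitions above reduce to simple arithmetic. The following is a minimal sketch of how they could be reproduced on exported data, not the SEO Spider’s own implementation. All function names are hypothetical, the word count splits on any whitespace as an approximation of ‘splitting it by spaces’, and the text ratio assumes the body text has already been extracted.

```python
import hashlib


def word_count(text: str) -> int:
    """Count words by splitting the extracted text on whitespace."""
    return len(text.split())


def text_ratio(text: str, html: str) -> float:
    """Text characters divided by total HTML characters, as a percentage."""
    return 100 * len(text) / len(html)


def size_in_kb(size_bytes: int) -> float:
    """Convert an exported size in bytes to kilobytes."""
    return size_bytes / 1024


def inlink_counts(links: list[tuple[str, str]], target: str) -> tuple[int, int]:
    """Return (inlinks, unique inlinks) to target from (source, destination) pairs."""
    sources = [src for src, dst in links if dst == target]
    return len(sources), len(set(sources))


def content_hash(body: str) -> str:
    """MD5 hex digest of the content; only exact duplicates share a hash."""
    return hashlib.md5(body.encode("utf-8")).hexdigest()


# 'page A' linking to 'page B' three times gives 3 inlinks and 1 unique inlink.
print(inlink_counts([("page A", "page B")] * 3, "page B"))
```

Because a single character difference changes the MD5 digest entirely, comparing hashes in this way only finds exact duplicates, which matches the description of the Hash column.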

Filters

This tab includes the following filters.

- HTML – HTML pages.

- JavaScript – Any JavaScript files.

- CSS – Any style sheets discovered.

- Images – Any images.

- PDF – Any portable document files.

- Flash – Any .swf files.

- Other – Any other file types, like docs etc.

- Unknown – Any URLs with an unknown content type, either because it has not been supplied, because it is incorrect, or because the URL can’t be crawled. For example, URLs blocked by robots.txt will also appear here, as their file type is unknown.

External

The external tab includes data about external URLs. URLs classed as ‘External’ are on a different subdomain from the start page of the crawl.

Columns

This tab includes the following columns.

- Address – The external URL address.

- Content – The content type of the URL.

- Status Code – The HTTP response code.

- Status – The HTTP header response.

- Crawl Depth – Depth of the page from the homepage or start page (number of ‘clicks’ away from the start page).

- Inlinks – Number of links found pointing to the external URL.

Filters

This tab includes the following filters.

- HTML – HTML pages.

- JavaScript – Any JavaScript files.

- CSS – Any style sheets discovered.

- Images – Any images.

- PDF – Any portable document files.

- Flash – Any .swf files.

- Other – Any other file types, like docs etc.

- Unknown – Any URLs with an unknown content type, either because it has not been supplied, or because the URL can’t be crawled. For example, URLs blocked by robots.txt will also appear here, as their file type is unknown.

Security

The security tab shows data related to security for internal URLs in a crawl.

Columns

This tab includes the following columns.

- Address – The URL crawled.

- Content – The content type of the URL.

- Status Code – HTTP response code.

- Status – The HTTP header response.

- Indexability – Whether the URL is Indexable or Non-Indexable.

- Indexability Status – The reason why a URL is Non-Indexable. For example, if it’s canonicalised to another URL.

- Canonical Link Element 1/2 etc – Canonical link element data on the URL. The Spider will find all instances if there are multiple.

- Meta Robots 1/2 etc – Meta robots found on the URL. The Spider will find all instances if there are multiple.

- X-Robots-Tag 1/2 etc – X-Robots-tag data. The Spider will find all instances if there are multiple.

Filters

This tab includes the following filters.

- HTTP URLs – This filter will show insecure (HTTP) URLs. All websites should be secure over HTTPS today on the web. Not only is it important for security, but it’s now expected by users. Chrome and other browsers display a ‘Not Secure’ message against any URLs that are HTTP, or have mixed content issues (where they load insecure resources on them).

- HTTPS URLs – The secure version of HTTP. All internal URLs should be over HTTPS and therefore should appear under this filter.

- Mixed Content – This shows any HTML pages loaded over a secure HTTPS connection that have resources such as images, JavaScript or CSS that are loaded via an insecure HTTP connection. Mixed content weakens HTTPS, and makes the pages easier for eavesdropping and compromising otherwise secure pages. Browsers might automatically block the HTTP resources from loading, or they may attempt to upgrade them to HTTPS. All HTTP resources should be changed to HTTPS to avoid security issues, and problems loading in a browser.

- Form URL Insecure – An HTML page has a form on it with an action attribute URL that is insecure (HTTP). This means that any data entered into the form is not secure, as it could be viewed in transit. All URLs contained within forms across a website should be encrypted and therefore need to be HTTPS.

- Form on HTTP URL – This means a form is on an HTTP page. Any data entered into the form, including usernames and passwords is not secure. Chrome can display a ‘Not Secure’ message if it discovers a form with a password input field on an HTTP page.

- Unsafe Cross-Origin Links – URLs that link to external websites using the target="_blank" attribute (to open in a new tab), without using rel="noopener" (or rel="noreferrer") at the same time. Using target="_blank" alone leaves those pages exposed to both security and performance issues in some legacy browsers, which are estimated to be below 5% of market share. In most modern browsers, such as Chrome, Safari, Firefox and Edge, setting target="_blank" on anchor elements implicitly provides the same behaviour as rel="noopener", so window.opener is not set.

- Protocol-Relative Resource Links – This filter will show any pages that load resources such as images, JavaScript and CSS using protocol-relative links. A protocol-relative link is simply a link to a URL without specifying the scheme (for example, //screamingfrog.co.uk). It helps save developers time from having to specify the protocol and lets the browser determine it based upon the current connection to the resource. However, this technique is now an anti-pattern with HTTPS everywhere, and can expose some sites to ‘man in the middle’ compromises and performance issues.

- Missing HSTS Header – Any URLs that are missing the HSTS response header. The HTTP Strict-Transport-Security response header (HSTS) instructs browsers that the site should only be accessed using HTTPS, rather than HTTP. If a website accepts a connection to HTTP, before being redirected to HTTPS, visitors will initially still communicate over HTTP. The HSTS header instructs the browser to never load over HTTP and to automatically convert all requests to HTTPS.

- Missing Content-Security-Policy Header – Any URLs that are missing the Content-Security-Policy response header. This header allows a website to control which resources are loaded for a page. This policy can help guard against cross-site scripting (XSS) attacks that exploit the browser’s trust of the content received from the server. The SEO Spider only checks for existence of the header, and does not interrogate the policies found within the header to determine whether they are well set-up for the website. This should be performed manually.

- Missing X-Content-Type-Options Header – Any URLs that are missing the ‘X-Content-Type-Options’ response header with a ‘nosniff’ value. In the absence of a MIME type, browsers may ‘sniff’ to guess the content type to interpret it correctly for users. However, this can be exploited by attackers who can try and load malicious code, such as JavaScript via an image they have compromised. To minimise these security issues, the X-Content-Type-Options response header should be supplied and set to ‘nosniff’. This instructs browsers to rely only on the Content-Type header and block anything that does not match accurately.

- Missing X-Frame-Options Header – Any URLs that are missing a X-Frame-Options response header with a ‘DENY’ or ‘SAMEORIGIN’ value. This instructs the browser not to render a page within a frame, iframe, embed or object. This helps avoid ‘click-jacking’ attacks, where your content is displayed on another web page that is controlled by an attacker.

- Missing Secure Referrer-Policy Header – Any URLs that are missing ‘no-referrer-when-downgrade’, ‘strict-origin-when-cross-origin’, ‘no-referrer’ or ‘strict-origin’ policies in the Referrer-Policy header. When using HTTPS, it’s important that the URLs do not leak in non-HTTPS requests. This can expose users to ‘man in the middle’ attacks, as anyone on the network can view them.

- Bad Content Type – This shows any URLs where the actual content type does not match the content type set in the header. It also identifies any invalid MIME types used. When the X-Content-Type-Options: nosniff response header is set by the server this is particularly important, as browsers rely on the content type header to correctly process the page. This can cause HTML web pages to be downloaded instead of being rendered when they are served with a MIME type other than text/html for example. Thus, all responses should have an accurate MIME type set in the content-type header.
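The ‘Missing … Header’ filters above amount to checking a response’s headers against a short list of expected names and values. The sketch below illustrates that idea for a single response, under stated assumptions: the function name is hypothetical, the input is a plain dictionary of header names to values, and a Referrer-Policy with several comma-separated values is treated as secure if any one of them is in the list given above. It is not the SEO Spider’s own logic.

```python
# Referrer-Policy values described above as secure.
SECURE_REFERRER_POLICIES = {
    "no-referrer-when-downgrade",
    "strict-origin-when-cross-origin",
    "no-referrer",
    "strict-origin",
}


def missing_security_headers(headers: dict[str, str]) -> list[str]:
    """Return the names of the security filters a response would fall under."""
    # Header names are case-insensitive, so normalise before comparing.
    h = {name.lower(): value.strip().lower() for name, value in headers.items()}
    issues = []
    if "strict-transport-security" not in h:
        issues.append("Missing HSTS Header")
    if "content-security-policy" not in h:
        # Only existence is checked; the policy itself is not evaluated.
        issues.append("Missing Content-Security-Policy Header")
    if h.get("x-content-type-options") != "nosniff":
        issues.append("Missing X-Content-Type-Options Header")
    if h.get("x-frame-options") not in {"deny", "sameorigin"}:
        issues.append("Missing X-Frame-Options Header")
    policies = [p.strip() for p in h.get("referrer-policy", "").split(",")]
    if not any(p in SECURE_REFERRER_POLICIES for p in policies):
        issues.append("Missing Secure Referrer-Policy Header")
    return issues
```

A response that only sets `X-Frame-Options: SAMEORIGIN` would be returned with the other four issues, while a response that sets all five headers with accepted values would return an empty list.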

To discover any HTTPS pages with insecure elements such as HTTP links, canonicals, pagination as well as mixed content (images, JS, CSS), we recommend using the ‘Insecure Content’ report under the ‘Reports’ top level menu.

Response Codes

The response codes tab shows the HTTP status and status codes from internal and external URLs in a crawl. The filters group URLs into common response code buckets.

Columns

This tab includes the following columns.

- Address – The URL crawled.

- Content – The content type of the URL.

- Status Code – The HTTP response code.

- Status – The HTTP header response.

- Indexability – Whether the URL is Indexable or Non-Indexable.

- Indexability Status – The reason why a URL is Non-Indexable. For example, if it’s canonicalised to another URL.

- Inlinks – Number of internal inlinks to the URL. ‘Internal inlinks’ are links pointing to a given URL from the same subdomain that is being crawled.

- Response Time – Time in seconds to download the URL. More detailed information can be found in our FAQ.

- Redirect URL – If the address URL redirects, this column will include the redirect URL target. The status code above will display the type of redirect, 301, 302 etc.

- Redirect Type – One of the following; HTTP Redirect: triggered by an HTTP header, HSTS Policy: turned around locally by the SEO Spider due to a previous HSTS header, JavaScript Redirect: triggered by execution of JavaScript (which can only occur when using JavaScript rendering) or Meta Refresh Redirect: triggered by a meta refresh tag in the HTML of the page.

Filters

This tab includes the following filters for both Internal and External URLs.

- Blocked by Robots.txt – All URLs blocked by the site’s robots.txt. This means they cannot be crawled and is a critical issue if you want the page content to be crawled and indexed by search engines.

- Blocked Resource – All resources such as images, JavaScript and CSS that are blocked from being rendered for a page. This can be either by robots.txt, or due to an error loading the file. This filter will only populate when JavaScript rendering is enabled (blocked resources will appear under ‘Blocked by Robots.txt’ in default ‘text only’ crawl mode). This can be an issue as the search engines might not be able to access critical resources to be able to render pages accurately.

- No Response – When the URL does not send a response to the SEO Spider’s HTTP request. Typically a malformed URL, connection timeout, connection refused or connection error. Malformed URLs should be updated and other connection issues can often be resolved by adjusting the SEO Spider configuration.

- Success (2XX) – The URL requested was received, understood, accepted and processed successfully. Ideally all URLs encountered in a crawl would be a status code ‘200’ with a ‘OK’ status, which is perfect for crawling and indexing of content.

- Redirection (3XX) – A redirection was encountered. These will include server-side redirects, such as 301 or 302 redirects. Ideally all internal links would be to canonical resolving URLs, and avoid linking to URLs that redirect. This reduces latency of redirect hops for users.

- Redirection (JavaScript) – A JavaScript redirect was encountered. Ideally all internal links would be to canonical resolving URLs, and avoid linking to URLs that redirect. This reduces latency of redirect hops for users.

- Redirection (Meta Refresh) – A meta refresh was encountered. Ideally all internal links would be to canonical resolving URLs, and avoid linking to URLs that redirect. This reduces latency of redirect hops for users.

- Redirect Chain – Internal URLs that redirect to another URL, which also then redirects. This can occur multiple times in a row, and each redirect is referred to as a ‘hop’. Full redirect chains can be viewed and exported via ‘Reports > Redirects > Redirect Chains’.

- Redirect Loop – Internal URLs that redirect to another URL, which also then redirects. This can occur multiple times in a row, and each redirect is referred to as a ‘hop’. This filter will only populate if a URL redirects to a previous URL within the redirect chain. Redirect chains with a loop can be viewed and exported via ‘Reports > Redirects > Redirect Chains’ with the ‘Loop’ column filtered to ‘True’.

- Client Error (4XX) – Indicates a problem occurred with the request. This can include responses such as 400 Bad Request, 403 Forbidden, 404 Not Found, 410 Gone, 429 Too Many Requests and more. All links on a website should ideally resolve to 200 ‘OK’ URLs. Links to errors such as 404s should be updated to their correct locations or removed, and the URLs redirected where appropriate.

- Server Error (5XX) – The server failed to fulfil an apparently valid request. This can include common responses such as 500 Internal Server Error and 503 Service Unavailable. All URLs should respond with a 200 ‘OK’ status and this might indicate a server that struggles under load or a misconfiguration that requires investigation.
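The status code buckets and the difference between a redirect chain and a redirect loop can be illustrated with a short sketch. It assumes the crawl’s redirects are available as a hypothetical mapping of each redirecting URL to its target. The function names are illustrative only, and the SEO Spider’s own handling may differ.

```python
def status_bucket(code: int) -> str:
    """Map an HTTP status code to the matching filter bucket."""
    if 200 <= code < 300:
        return "Success (2XX)"
    if 300 <= code < 400:
        return "Redirection (3XX)"
    if 400 <= code < 500:
        return "Client Error (4XX)"
    if 500 <= code < 600:
        return "Server Error (5XX)"
    return "Other"


def follow_redirects(start: str, redirects: dict[str, str]) -> tuple[list[str], bool]:
    """Follow redirects from start; return the URLs visited and whether a loop was hit."""
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            # The target was already visited earlier in the chain: a loop.
            return path, True
        seen.add(current)
    return path, False


def is_redirect_chain(path: list[str]) -> bool:
    """A chain has at least two hops (a redirect to a URL that also redirects)."""
    return len(path) - 1 >= 2
```

With `redirects = {"/a": "/b", "/b": "/c"}`, following `/a` visits three URLs in two hops, which is a chain without a loop. With `{"/a": "/b", "/b": "/a"}`, the second hop returns to `/a`, so the loop flag is set.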

Please see our Learn SEO guide on HTTP Status Codes, or to troubleshoot responses when using the SEO Spider, read our HTTP Status Codes When Crawling tutorial.

URL

The URL tab shows data related to the URLs discovered in a crawl. The filters show common issues discovered for URLs.

Columns

This tab includes the following columns.

- Address – The URL crawled.

- Content – The content type of the URL.

- Status Code – HTTP response code.

- Status – The HTTP header response.

- Indexability – Whether the URL is Indexable or Non-Indexable.

- Indexability Status – The reason why a URL is Non-Indexable. For example, if it’s canonicalised to another URL.

- Hash – Hash value of the page. This is a duplicate content check. If two hash values match the pages are exactly the same in content.

- Length – The character length of the URL.

- Canonical 1 – The canonical link element data.

- URL Encoded Address – The URL actually requested by the SEO Spider. All non-ASCII characters are percent-encoded; see RFC 3986 for further details.
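As an illustration of what percent-encoding does to a URL, here is a minimal sketch using Python’s standard library. The function name is hypothetical, only the path and query are encoded, and internationalised hostnames are not handled, so the output will not necessarily match the ‘URL Encoded Address’ column in every case.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def percent_encode_url(url: str) -> str:
    """Percent-encode non-ASCII characters in the path and query of a URL."""
    parts = urlsplit(url)
    # '%' is kept as safe so that existing escapes are not encoded twice.
    path = quote(parts.path, safe="/%")
    query = quote(parts.query, safe="=&%")
    return urlunsplit((parts.scheme, parts.netloc, path, query, parts.fragment))


# Each non-ASCII character becomes one or more %XX escapes of its UTF-8 bytes.
print(percent_encode_url("https://example.com/caf\u00e9"))
```

The ‘é’ in the example is two bytes in UTF-8 (C3 A9), so it is requested as `%C3%A9`.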

Filters

This tab includes the following filters.

- Non ASCII Characters – The URL has characters in it that are not included in the ASCII character-set. Standards outline that URLs can only be sent using the ASCII character-set and some users may have difficulty with subtleties of characters outside this range. URLs must be converted into a valid ASCII format, by encoding links to the URL with safe characters (made up of % followed by two hexadecimal digits). Today browsers and the search engines are largely able to transform URLs accurately.

- Underscores – The URL has underscores within it, which are not always seen as word separators by search engines. Hyphens are recommended for word separators.

- Uppercase – The URL has uppercase characters within it. URLs are case sensitive, so as best practice generally URLs should be lowercase, to avoid any potential mix ups and duplicate URLs.

- Multiple Slashes – The URL has multiple forward slashes in the path (for example, screamingfrog.co.uk/seo//). This is generally by mistake and as best practice URLs should only have a single slash between sections of a path to avoid any potential mix ups and duplicate URLs.

- Repetitive Path – The URL has a path that is repeated in the URL string (for example, screamingfrog.co.uk/services/seo/technical/seo/). In some cases this can be legitimate and logical, however it also often points to poor URL structure and potential improvements. It can also help identify issues with incorrect relative linking, causing infinite URLs.

- Contains A Space – The URL has a space in it. These are considered unsafe and could cause the link to be broken when sharing the URL. Hyphens should be used as word separators instead of spaces.

- Internal Search – The URL might be part of the website’s internal search function. Google and other search engines recommend blocking internal search pages from being crawled. To avoid Google indexing the blocked internal search URLs, they should not be discoverable via internal links either.

- Parameters – The URL includes parameters such as ‘?’ or ‘&’ in it. This isn’t an issue for Google or other search engines to crawl, but it’s recommended to limit the number of parameters in a URL which can be complicated for users, and can be a sign of low value-add URLs.

- Broken Bookmark – URLs that have a broken bookmark (also known as ‘named anchors’, ‘jump links’, and ‘skip links’) that link users to a specific part of a webpage using an ID attribute in the HTML and append a fragment (#) and the ID name to the URL. When the link is clicked, the page will scroll to the location with the bookmark. While these links can be excellent for users, it’s easy to make mistakes in the set-up, and they often become ‘broken’ over time as pages are updated and IDs are changed or removed. A broken bookmark will mean the user is still taken to the correct page, but they won’t be directed to the intended section. While Google will see these URLs as the same page (as it ignores anything from the #), they can use named anchors for ‘jump to’ links in their search results for the page ranking. Please see our guide on how to find broken bookmarks.

- GA Tracking Parameters – URLs that contain Google Analytics tracking parameters. In addition to creating duplicate pages that must be crawled, using tracking parameters on links internally can overwrite the original session data. utm= parameters strip the original source of traffic and start a new session with the specified attributes. _ga= and _gl= parameters are used for cross-domain linking and identify a specific user; including these on links prevents a unique user ID from being assigned.

- Over 115 characters – The URL is over 115 characters in length. This is not necessarily an issue, however research has shown that users prefer shorter, concise URL strings.
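Many of the URL filters above are simple string checks. The sketch below shows how a subset of them could be approximated for a single URL. The function name is hypothetical and the exact rules are assumptions (for example, uppercase is checked in the path only, and a repeated path segment anywhere in the path counts as repetitive), so results may differ from the SEO Spider’s own filters.

```python
from urllib.parse import urlsplit


def url_issues(url: str) -> list[str]:
    """Return the names of the URL filters that a URL appears to match."""
    parts = urlsplit(url)
    segments = [s for s in parts.path.split("/") if s]
    issues = []
    if not url.isascii():
        issues.append("Non ASCII Characters")
    if "_" in url:
        issues.append("Underscores")
    if any(c.isupper() for c in parts.path):
        issues.append("Uppercase")
    if "//" in parts.path:
        issues.append("Multiple Slashes")
    if len(segments) != len(set(segments)):
        issues.append("Repetitive Path")
    if " " in url:
        issues.append("Contains A Space")
    if parts.query:
        issues.append("Parameters")
    if len(url) > 115:
        issues.append("Over 115 characters")
    return issues
```

For example, `https://example.com/services/seo/technical/seo/` repeats the `seo` segment and is reported as a repetitive path, while `https://example.com/seo//` is reported for multiple slashes.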

Please see our Learn SEO guide on URL Structure.

Page Titles

The page title tab includes data related to page title elements of internal URLs in the crawl. The filters show common issues discovered for page titles.

The page title, often referred to as the ‘title tag’, ‘meta title’ or sometimes ‘SEO title’, is an HTML element in the head of a webpage that describes the purpose of the page to users and search engines. Page titles are widely considered to be one of the strongest on-page ranking signals for a page.

The page title element should be placed in the head of the document and looks like this in HTML:

<title>This Is A Page Title</title>

Columns

This tab includes the following columns.

- Address – The URL crawled.

- Occurrences – The number of page titles found on the page (the maximum the SEO Spider will find is 2).

- Title 1/2 – The content of the page title elements.

- Title 1/2 length – The character length of the page title(s).

- Indexability – Whether the URL is Indexable or Non-Indexable.

- Indexability Status – The reason why a URL is Non-Indexable. For example, if the URL is canonicalised to another URL, or has a ‘noindex’ etc.

Filters

This tab includes the following filters.

- Missing – Any pages which have a missing page title element, or where the content is empty or contains only whitespace. Page titles are read and used by both users and the search engines to understand the purpose of a page. So it’s critical that pages have concise, descriptive and unique page titles.

- Duplicate – Any pages which have duplicate page titles. It’s really important to have distinct and unique page titles for every page. If every page has the same page title, then it can make it more challenging for users and the search engines to understand one page from another.

- Over 60 characters – Any pages which have page titles over 60 characters in length. Characters over this limit might be truncated in Google’s search results and carry less weight in scoring.

- Below 30 characters – Any pages which have page titles under 30 characters in length. This isn’t necessarily an issue, but you have more room to target additional keywords or communicate your USPs.

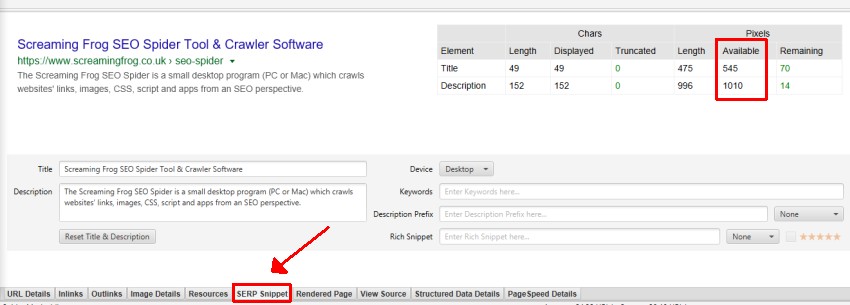

- Over X Pixels – Google snippet length is actually based upon pixels limits, rather than a character length. The SEO Spider tries to match the latest pixel truncation points in the SERPs, but it is an approximation and Google adjusts them frequently. This filter shows any pages which have page titles over X pixels in length.

- Below X Pixels – Any pages which have page titles under X pixels in length. This isn’t necessarily a bad thing, but you have more room to target additional keywords or communicate your USPs.

- Same as h1 – Any page titles which match the h1 on the page exactly. This is not necessarily an issue, but may point to a potential opportunity to target alternative keywords, synonyms, or related key phrases.

- Multiple – Any pages which have multiple page titles. There should only be a single page title element for a page. Multiple page titles are often caused by multiple conflicting plugins or modules in CMS.

- Outside <head> – Pages with a title element that is outside of the head element in the HTML. The page title should be within the head element, or search engines may ignore it. Google will often still recognise the page title even outside of the head element, however this should not be relied upon.

Please see our Learn SEO guide on writing Page Titles.
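The character-based filters above can be sketched in a few lines of Python. This is only an illustration and not the SEO Spider's own implementation: the function name `title_length_filters` is hypothetical, the 60 and 30 character limits are taken from the filter names above, and measuring the raw string with `len()` is an assumption.

```python
def title_length_filters(title, h1=None, max_chars=60, min_chars=30):
    """Return the character-based filters a page title would fall under.

    Hypothetical helper for illustration; limits mirror the filter names.
    """
    # Treat a missing, empty or whitespace-only title as 'Missing'.
    if title is None or not title.strip():
        return ["Missing"]

    filters = []
    if len(title) > max_chars:
        filters.append(f"Over {max_chars} characters")
    if len(title) < min_chars:
        filters.append(f"Below {min_chars} characters")
    # 'Same as h1' requires an exact match with the h1 text.
    if h1 is not None and title == h1:
        filters.append("Same as h1")
    return filters


if __name__ == "__main__":
    print(title_length_filters("SEO Spider Tabs", h1="SEO Spider Tabs"))
```

The pixel-based filters are not covered here, as they depend on font rendering rather than character counts.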

Meta description

The meta description tab includes data related to meta descriptions of internal URLs in the crawl. The filters show common issues discovered for meta descriptions.

The meta description is an HTML meta element in the head of a webpage that provides a summary of the page to users. The words in a description are not used in ranking by Google, but they can be shown in the search results to users, and can therefore heavily influence click-through rates.

The meta description should be placed in the head of the document and looks like this in HTML:

<meta name="description" content="This is a meta description."/>

Columns

This tab includes the following columns.

- Address – The URL crawled.

- Occurrences – The number of meta descriptions found on the page (the maximum we find is 2).

- Meta Description 1/2 – The meta description.

- Meta Description 1/2 length – The character length of the meta description.

- Indexability – Whether the URL is Indexable or Non-Indexable.

- Indexability Status – The reason why a URL is Non-Indexable. For example, if the URL is canonicalised to another URL.

Filters

This tab includes the following filters.

- Missing – Any pages which have a missing meta description, or where its content is empty or contains only whitespace. This is a missed opportunity to communicate the benefits of your product or service and influence click-through rates for important URLs.

- Duplicate – Any pages which have duplicate meta descriptions. It’s really important to have distinct and unique meta descriptions that communicate the benefits and purpose of each page. If they are duplicate or irrelevant, then they will be ignored by search engines.

- Over 155 characters – Any pages which have meta descriptions over 155 characters in length. Characters over this limit might be truncated in Google’s search results.

- Below 70 characters – Any pages which have meta descriptions below 70 characters in length. This isn’t strictly an issue, but an opportunity. There is additional room to communicate benefits, USPs or calls to action.

- Over X Pixels – Google snippet length is actually based upon pixel limits, rather than a character length. The SEO Spider tries to match the latest pixel truncation points in the SERPs, but it is an approximation and Google adjusts them frequently. This filter shows any pages which have descriptions over X pixels in length and might be truncated in Google’s search results.

- Below X Pixels – Any pages which have meta descriptions under X pixels in length. This isn’t strictly an issue, but an opportunity. There is additional room to communicate benefits, USPs or calls to action.

- Multiple – Any pages which have multiple meta descriptions. There should only be a single meta description for a page. Multiple meta descriptions are often caused by multiple conflicting plugins or modules in a CMS.

- Outside <head> – Pages with a meta description that is outside of the head element in the HTML. The meta description should be within the head element, or search engines may ignore it.

Please see our Learn SEO guide on writing Meta Descriptions.
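As a rough illustration of the ‘Missing’, ‘Multiple’ and ‘Outside <head>’ filters, the sketch below uses Python's standard `html.parser` module to collect meta descriptions and record whether each one sits inside the head element. The class and function names are hypothetical, and the sketch only tracks explicit `<head>` tags, so it will not behave like a full browser parser on invalid HTML.

```python
from html.parser import HTMLParser


class MetaDescriptionParser(HTMLParser):
    """Collect (content, inside_head) for each meta description found."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.descriptions = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "meta":
            attr = dict(attrs)
            if (attr.get("name") or "").lower() == "description":
                content = attr.get("content") or ""
                self.descriptions.append((content, self.in_head))

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def meta_description_filters(html):
    """Hypothetical helper: return the filters a page would fall under."""
    parser = MetaDescriptionParser()
    parser.feed(html)
    found = parser.descriptions

    filters = []
    # No description at all, or only empty / whitespace-only content.
    if not found or all(not content.strip() for content, _ in found):
        filters.append("Missing")
    if len(found) > 1:
        filters.append("Multiple")
    if any(not inside_head for _, inside_head in found):
        filters.append("Outside <head>")
    return filters


if __name__ == "__main__":
    page = ('<html><head><meta name="description" '
            'content="This is a meta description."/></head></html>')
    print(meta_description_filters(page))
```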

Meta keywords

The meta keywords tab includes data related to meta keywords. The filters show common issues discovered for meta keywords.

Meta keywords are widely ignored by search engines and are not used as a ranking signal by any of the major Western search engines. In particular, Google does not consider them at all when ranking pages in its search results. Therefore we recommend ignoring them completely unless you are targeting alternative search engines.

Other search engines such as Yandex or Baidu may still use them in ranking, but we recommend researching their current status before taking the time to optimise them.

The meta keywords tag should be placed in the head of the document and looks like this in HTML:

<meta name="keywords" content="seo, seo agency, seo services"/>

Columns

This tab includes the following columns.

- Address – The URL crawled.

- Occurrences – The number of meta keywords found on the page (the maximum we find is 2).

- Meta Keyword 1/2 – The meta keywords.

- Meta Keyword 1/2 length – The character length of the meta keywords.

- Indexability – Whether the URL is Indexable or Non-Indexable.

- Indexability Status – The reason why a URL is Non-Indexable. For example, if it’s canonicalised to another URL.

Filters

This tab includes the following filters.

- Missing – Any pages which have missing meta keywords. If you’re targeting Google, Bing and Yahoo then this is fine as they do not use them in ranking. If you’re targeting Baidu or Yandex, then you may wish to consider including relevant target keywords.

- Duplicate – Any pages which have duplicate meta keywords. If you’re targeting Baidu or Yandex, then unique keywords relevant to the purpose of the page are recommended.

- Multiple – Any pages which have multiple meta keywords. There should only be a single tag on the page.

h1

The h1 tab shows data related to the <h1> heading of a page. The filters show common issues discovered for <h1>s.

The <h1> to <h6> tags are used to define HTML headings. The <h1> is considered the most important, main heading of a page, and <h6> the least important.

Headings should be ordered by size and importance, and they help users and search engines understand the content and sections of a page. The <h1> should describe the main title and purpose of the page, and is widely considered to be one of the stronger on-page ranking signals.

The <h1> element should be placed in the body of the document and looks like this in HTML:

<h1>This Is An h1</h1>

By default, the SEO Spider will only extract and report on the first two <h1>s discovered on a page. If you wish to extract all <h1>s, then we recommend using custom extraction.

Columns

This tab includes the following columns.

- Address – The URL crawled.

- Occurrences – The number of <h1>s found on the page. As outlined above, the maximum we find is 2.

- h1-1/2 – The content of the <h1>.

- h1-length-1/2 – The character length of the <h1>.

- Indexability – Whether the URL is Indexable or Non-Indexable.

- Indexability Status – The reason why a URL is Non-Indexable. For example, if it’s canonicalised to another URL.

Filters

This tab includes the following filters.

- Missing – Any pages which have a missing <h1>, or where its content is empty or contains only whitespace. <h1>s are read and used by both users and the search engines to understand the purpose of a page, so it’s critical that pages have concise, descriptive and unique headings.

- Duplicate – Any pages which have duplicate <h1>s. It’s important to have distinct, unique and useful pages. If every page has the same <h1>, then it can make it more challenging for users and the search engines to understand one page from another.

- Over 70 characters – Any pages which have <h1> over 70 characters in length. This is not strictly an issue, as there isn’t a character limit for headings. However, they should be concise and descriptive for users and search engines.

- Multiple – Any pages which have multiple <h1>. While this is not strictly an issue because HTML5 standards allow multiple <h1>s on a page, there are some problems with this modern approach in terms of usability. It’s advised to use heading rank (h1–h6) to convey document structure. The classic HTML4 standard defines there should only be a single <h1> per page, and this is still generally recommended for users and SEO.

- Alt Text in h1 – Pages which have image alt text within an h1. This can be because text within the image is considered as the main heading on the page, or due to inappropriate mark-up. Some CMS templates will automatically include an h1 around a logo across a website. While there are strong arguments that text rather than alt text should be used for headings, search engines may understand alt text within an h1 as part of the h1 and score accordingly.

- Non-sequential – Pages with an h1 that is not the first heading on the page. Heading elements should be in a logical, sequentially-descending order. The purpose of heading elements is to convey the structure of the page, and they should be in logical order from h1 to h6, which helps users navigate the page, including those who rely on assistive technologies.

Please see our Learn SEO guide on Heading Tags.
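The ‘Missing’, ‘Multiple’ and ‘Non-sequential’ h1 checks described above can be approximated by recording the order in which heading elements appear in the HTML. The following is a minimal sketch using Python's standard `html.parser`. The names `HeadingOrderParser` and `h1_filters` are hypothetical, and for brevity it only checks that an h1 element is present, not whether its content is empty.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingOrderParser(HTMLParser):
    """Record heading levels (1-6) in the order they appear in the source."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self.levels.append(int(tag[1]))


def h1_filters(html):
    """Hypothetical helper: return the h1 filters a page would fall under."""
    parser = HeadingOrderParser()
    parser.feed(html)
    levels = parser.levels
    h1_count = levels.count(1)

    filters = []
    if h1_count == 0:
        filters.append("Missing")
    if h1_count > 1:
        filters.append("Multiple")
    # An h1 exists, but another heading level appears before it.
    if h1_count > 0 and levels[0] != 1:
        filters.append("Non-sequential")
    return filters


if __name__ == "__main__":
    print(h1_filters("<body><h2>Section</h2><h1>This Is An h1</h1></body>"))
```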

h2

The h2 tab shows data related to the <h2> heading of a page. The filters show common issues discovered for <h2>s.

The <h1> to <h6> tags are used to define HTML headings. The <h2> is considered the second most important heading of a page and is generally sized and styled as the second largest heading.

<h2> headings are often used to describe sections or topics within a document. They act as signposts for the user, and can help search engines understand the page.

The <h2> element should be placed in the body of the document and looks like this in HTML:

<h2>This Is An h2</h2>

By default, the SEO Spider will only extract and report on the first two <h2>s discovered on a page. If you wish to extract all <h2>s, then we recommend using custom extraction.

Columns

This tab includes the following columns.

- Address – The URL crawled.

- Occurrences – The number of <h2>s found on the page. As outlined above, the maximum we find is 2.

- h2-1/2 – The content of the <h2>.

- h2-length-1/2 – The character length of the <h2>.

- Indexability – Whether the URL is Indexable or Non-Indexable.

- Indexability Status – The reason why a URL is Non-Indexable. For example, if it’s canonicalised to another URL.

Filters

This tab includes the following filters.

- Missing – Any pages which have a missing <h2>, or where its content is empty or contains only whitespace. <h2>s are read and used by both users and the search engines to understand the page and sections. Ideally most pages would have logical, descriptive <h2>s.

- Duplicate – Any pages which have duplicate <h2>s. It’s important to have distinct, unique and useful pages. If every page has the same <h2>, then it can make it more challenging for users and the search engines to understand one page from another.

- Over 70 characters – Any pages which have <h2> over 70 characters in length. This is not strictly an issue, as there isn’t a character limit for headings. However, they should be concise and descriptive for users and search engines.

- Multiple – Any pages which have multiple <h2>s. This is not an issue as HTML standards allow multiple <h2>s when used in a logical hierarchical heading structure. However, this filter can help you quickly scan to review if they are used appropriately.

- Non-sequential – Pages with an h2 that is not the second heading level after the h1 on the page. Heading elements should be in a logical, sequentially-descending order. The purpose of heading elements is to convey the structure of the page, and they should be in logical order from h1 to h6, which helps users navigate the page, including those who rely on assistive technologies.

Please see our Learn SEO guide on Heading Tags.

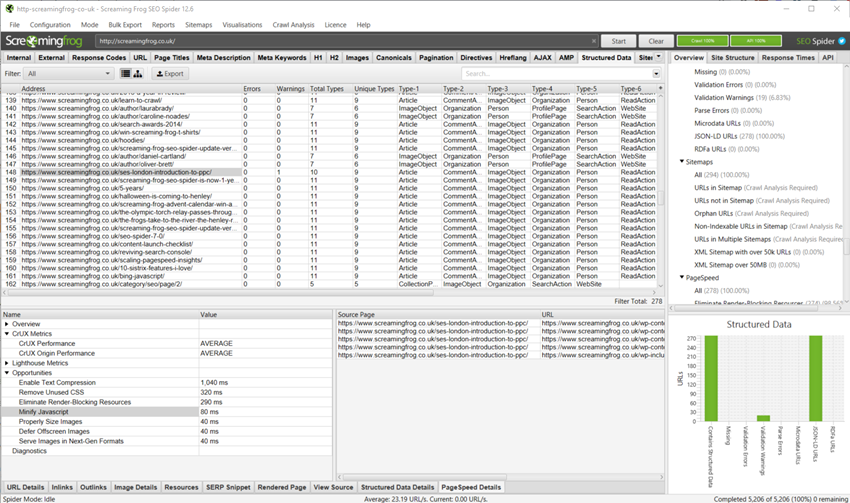

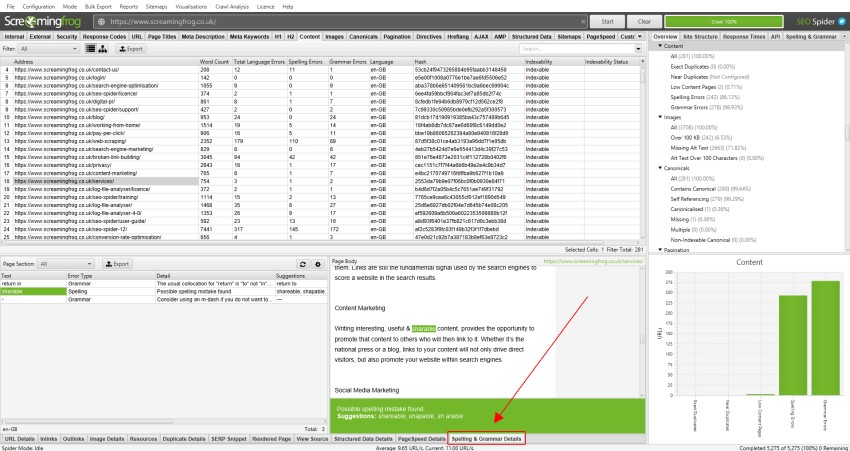

Content

The ‘Content’ tab shows data related to the content of internal HTML URLs discovered in a crawl.

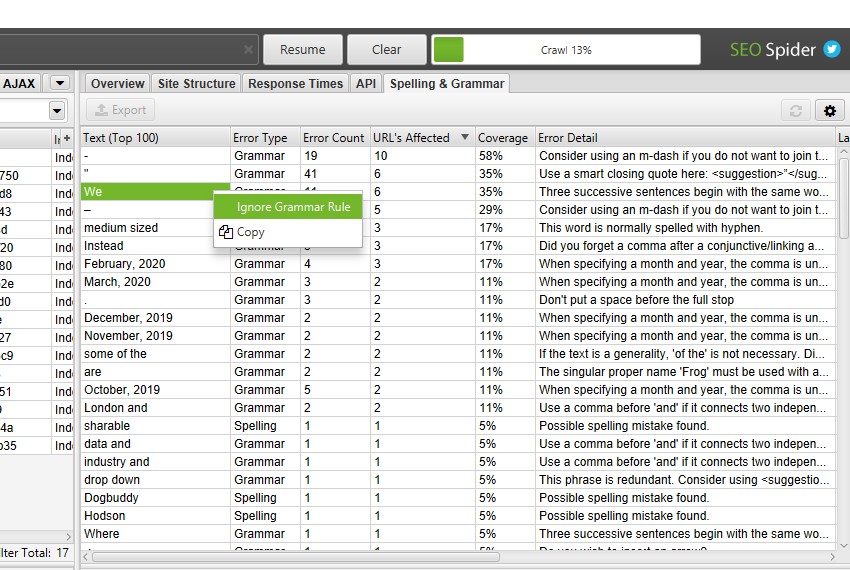

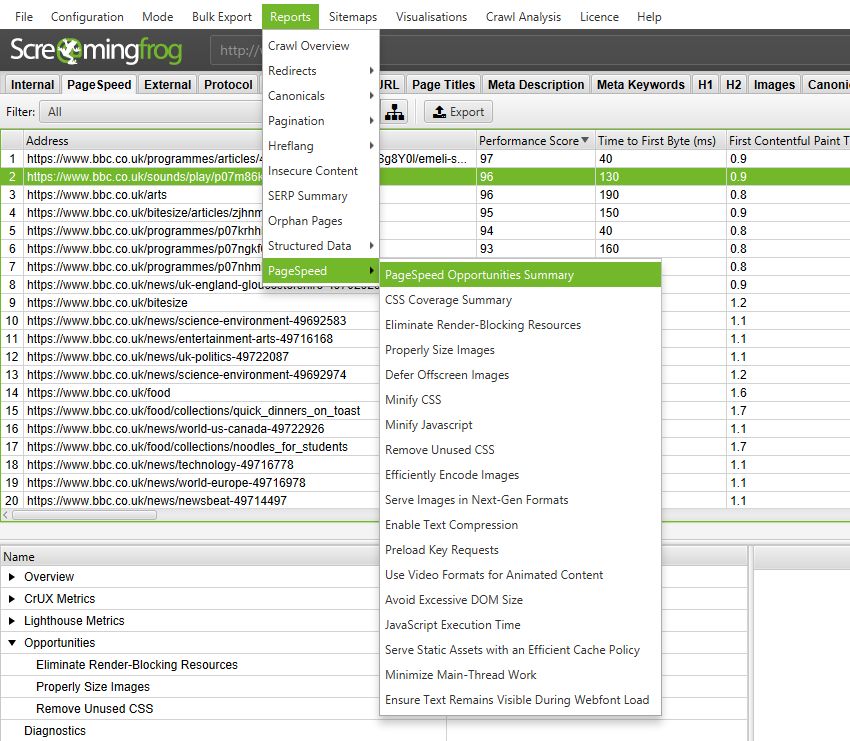

This includes word count, readability, duplicate and near duplicate content, and spelling and grammar errors.

Columns

This tab includes the following columns.

- Address – The URL address.

- Word Count – This is all ‘words’ inside the body tag, excluding HTML markup. The count is based upon the content area that can be adjusted under ‘Config > Content > Area’. By default, the nav and footer elements are excluded. You can include or exclude HTML elements, classes and IDs to calculate a refined word count. Our figures may not be exactly what performing this calculation manually would find, as the parser performs certain fix-ups on invalid HTML. Your rendering settings also affect what HTML is considered. Our definition of a word is taking the text and splitting it by spaces. No consideration is given to visibility of content (such as text inside a div set to hidden).

- Average Words Per Sentence – The total number of words from the content area, divided by the total number of sentences discovered. This is calculated as part of the Flesch readability analysis.

- Flesch Reading Ease Score – The Flesch reading ease test measures the readability of text. It’s a widely used readability formula, which uses the average length of sentences, and average number of syllables per word to provide a score between 0-100. 0 is very difficult to read and best understood by university graduates, while 100 is very easy to read and can be understood by an 11 year old student.

- Readability – The overall readability assessment classification based upon the Flesch Reading Ease Score and documented score groups.

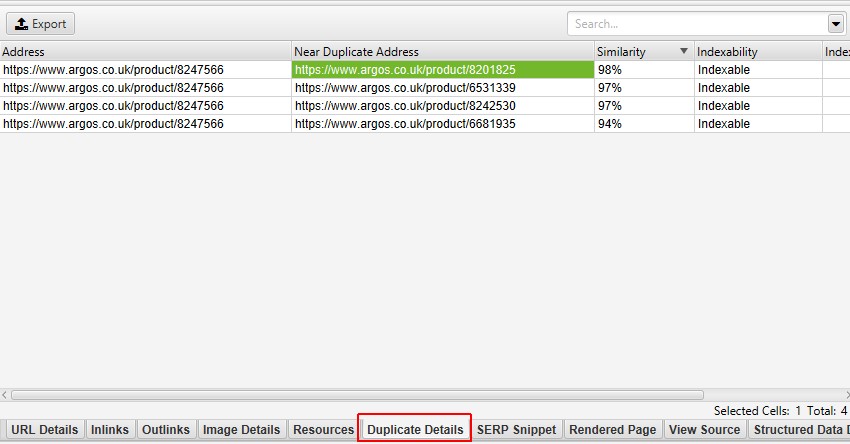

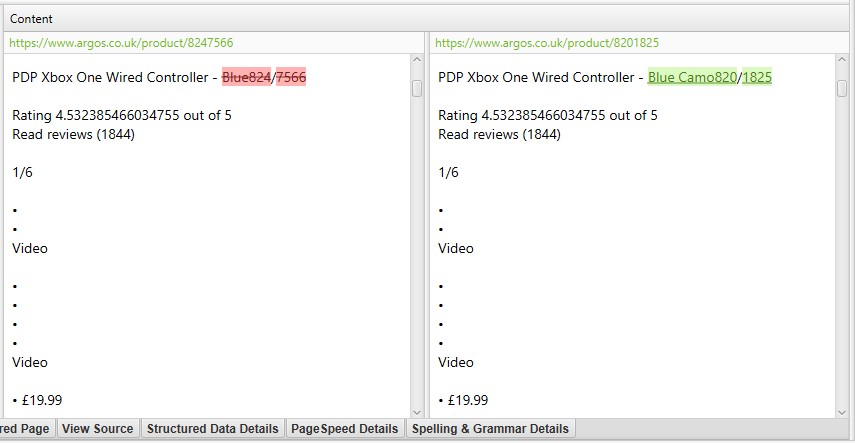

- Closest Similarity Match – This shows the highest similarity percentage of a near duplicate URL. The SEO Spider will identify near duplicates with a 90% similarity match, which can be adjusted to find content with a lower similarity threshold. For example, if there were two near duplicate pages for a page with 99% and 90% similarity respectively, then 99% will be displayed here. To populate this column the ‘Enable Near Duplicates’ configuration must be selected via ‘Config > Content > Duplicates‘, and post ‘Crawl Analysis‘ must be performed. Only URLs with content over the selected similarity threshold will contain data, the others will remain blank. Thus by default, this column will only contain data for URLs with 90% or higher similarity, unless it has been adjusted via the ‘Config > Content > Duplicates’ and ‘Near Duplicate Similarity Threshold’ setting.

- No. Near Duplicates – The number of near duplicate URLs discovered in a crawl that meet or exceed the ‘Near Duplicate Similarity Threshold’, which is a 90% match by default. This setting can be adjusted under ‘Config > Content > Duplicates’. To populate this column the ‘Enable Near Duplicates’ configuration must be selected via ‘Config > Content > Duplicates‘, and post ‘Crawl Analysis‘ must be performed.

- Total Language Errors – The total number of spelling and grammar errors discovered for a URL. For this column to be populated then either ‘Enable Spell Check’ or ‘Enable Grammar Check’ must be selected via ‘Config > Content > Spelling & Grammar‘.

- Spelling Errors – The total number of spelling errors discovered for a URL. For this column to be populated then ‘Enable Spell Check’ must be selected via ‘Config > Content > Spelling & Grammar‘.

- Grammar Errors – The total number of grammar errors discovered for a URL. For this column to be populated then ‘Enable Grammar Check’ must be selected via ‘Config > Content > Spelling & Grammar’.

- Language – The language selected for spelling and grammar checks. This is based upon the HTML language attribute, but the language can also be set via ‘Config > Content > Spelling & Grammar‘.

- Hash – Hash value of the page using the MD5 algorithm. This is a duplicate content check for exact duplicate content only. If two hash values match, the pages are exactly the same in content. If there’s a single character difference, they will have unique hash values and not be detected as duplicate content. So this is not a check for near duplicate content. The exact duplicates can be seen under ‘Content > Exact Duplicates’.

- Indexability – Whether the URL is Indexable or Non-Indexable.

- Indexability Status – The reason why a URL is Non-Indexable. For example, if it’s canonicalised to another URL.
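To make the word count and readability columns more concrete, the sketch below splits text on whitespace to count words and applies the standard published Flesch Reading Ease formula. It is an illustration only. The function names are hypothetical, sentence and syllable totals are passed in rather than detected, and how the SEO Spider counts syllables or handles scores outside 0-100 is not covered here.

```python
def word_count(text):
    """Count 'words' by splitting the text on whitespace."""
    return len(text.split())


def average_words_per_sentence(total_words, total_sentences):
    """Total words divided by total sentences discovered."""
    return total_words / total_sentences


def flesch_reading_ease(total_words, total_sentences, total_syllables):
    """Standard Flesch Reading Ease formula.

    206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)
    The raw result can fall outside 0-100 for extreme inputs.
    """
    words_per_sentence = average_words_per_sentence(total_words, total_sentences)
    syllables_per_word = total_syllables / total_words
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


if __name__ == "__main__":
    # Hypothetical page: 100 words, 5 sentences, 150 syllables.
    print(round(flesch_reading_ease(100, 5, 150), 3))
```

Longer sentences and more syllables per word both lower the score, which is why copy with shorter sentences and simpler words is scored as easier to read.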

Filters

This tab includes the following filters.

- Exact Duplicates – This filter will show pages that are identical to each other using the MD5 algorithm which calculates a ‘hash’ value for each page and can be seen in the ‘hash’ column. This check is performed against the full HTML of the page. It will show all pages with matching hash values that are exactly the same. Exact duplicate pages can lead to the splitting of PageRank signals and unpredictability in ranking. There should only be a single canonical version of a URL that exists and is linked to internally. Other versions should not be linked to, and they should be 301 redirected to the canonical version.

- Near Duplicates – This filter will show similar pages based upon the configured similarity threshold using the minhash algorithm. The threshold can be adjusted under ‘Config > Content > Duplicates’ and is set at 90% by default. The ‘Closest Similarity Match’ column displays the highest percentage of similarity to another page. The ‘No. Near Duplicates’ column displays the number of pages that are similar to the page based upon the similarity threshold. The algorithm is run against text on the page, rather than the full HTML like exact duplicates. The content used for this analysis can be configured under ‘Config > Content > Area’. Pages can have a 100% similarity, but only be a ‘near duplicate’ rather than exact duplicate. This is because exact duplicates are excluded as near duplicates, to avoid them being flagged twice. Similarity scores are also rounded, so 99.5% or higher will be displayed as 100%. To populate this column the ‘Enable Near Duplicates’ configuration must be selected via ‘Config > Content > Duplicates‘, and post ‘Crawl Analysis‘ must be performed.

- Low Content Pages – This will show any HTML pages with a word count below 200 words by default. The word count is based upon the content area settings used in the analysis which can be configured via ‘Config > Content > Area’. There isn’t a minimum word count for pages in reality, but the search engines do require descriptive text to understand the purpose of a page. This filter should only be used as a rough guide to help identify pages that might be improved by adding more descriptive content in the context of the website and page’s purpose. Some websites, such as ecommerce, will naturally have lower word counts, which can be acceptable if a product’s details can be communicated efficiently. The word count used for the low content pages filter can be adjusted via ‘Config > Spider > Preferences > Low Content Word Count‘ to your own preferences.

- Soft 404 Pages – Pages that respond with a ‘200’ status code suggesting they are ‘OK’, but appear to be an error page – often referred to as a ‘404’ or ‘page not found’. These typically should respond with a 404 status code if the page is no longer available. These pages are identified by looking for common error text used on pages, such as ‘Page Not Found’, or ‘404 Page Can’t Be Found’. The text used to identify these pages can be configured under ‘Config > Spider > Preferences’.

- Spelling Errors – This filter contains any HTML pages with spelling errors. For this filter and respective columns to be populated then ‘Enable Spell Check’ must be selected via ‘Config > Content > Spelling & Grammar‘.

- Grammar Errors – This filter contains any HTML pages with grammar errors. For this column to be populated then ‘Enable Grammar Check’ must be selected via ‘Config > Content > Spelling & Grammar‘.

- Readability Difficult – Copy on the page is difficult to read and best understood by college graduates according to the Flesch reading-ease score formula. Copy that has long sentences and uses complex words is generally harder to read and understand. Consider improving the readability of copy for your target audience. Copy that uses shorter sentences with less complex words is often easier to read and understand.

- Readability Very Difficult – Copy on the page is very difficult to read and best understood by university graduates according to the Flesch reading-ease score formula. Copy that has long sentences and uses complex words is generally harder to read and understand. Consider improving the readability of copy for your target audience. Copy that uses shorter sentences with less complex words is often easier to read and understand.

- Lorem Ipsum Placeholder – Pages that contain ‘Lorem ipsum’ text that is commonly used as a placeholder to demonstrate the visual form of a webpage. This can be left on web pages by mistake, particularly during new website builds.

Please see our Learn SEO guide on duplicate content, and our ‘How To Check For Duplicate Content‘ tutorial.
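The exact duplicate check described above relies on the property that identical input always produces an identical MD5 hash, while a single character difference produces a different one. A minimal sketch of that idea using Python's standard `hashlib` is shown below. The function names and the example URLs are hypothetical, and encoding the HTML as UTF-8 before hashing is an assumption.

```python
import hashlib
from collections import defaultdict


def md5_hash(html):
    """Return the MD5 hex digest of a page's full HTML (UTF-8 assumed)."""
    return hashlib.md5(html.encode("utf-8")).hexdigest()


def exact_duplicates(pages):
    """Group URLs whose HTML produces the same hash.

    `pages` maps URL -> HTML. Only groups with two or more URLs are returned.
    """
    groups = defaultdict(list)
    for url, html in pages.items():
        groups[md5_hash(html)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


if __name__ == "__main__":
    pages = {
        "https://www.example.com/a": "<html><body>Hello</body></html>",
        "https://www.example.com/b": "<html><body>Hello</body></html>",
        # One extra character, so this page gets a different hash.
        "https://www.example.com/c": "<html><body>Hello!</body></html>",
    }
    print(exact_duplicates(pages))
```

Because any difference changes the hash, this approach cannot find near duplicates, which is why a separate similarity algorithm is used for those.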

Images

The images tab shows data related to any images discovered in a crawl. This includes both internal and external images, discovered by either <img src= tags, or <a href= tags. The filters show common issues discovered for images and their alt text.

Image alt attributes (often referred to incorrectly as ‘alt tags’) can be viewed by clicking on an image and then the ‘Image Details’ tab at the bottom, which populates the lower window tab.

Alt attributes should specify relevant and descriptive alternative text about the purpose of an image and appear in the source of the HTML like the below example.

<img src="screamingfrog-logo.jpg" alt="Screaming Frog" />

Decorative images should provide a null (empty) alt text (alt=””) so that they can be ignored by assistive technologies, such as screen readers, rather than not including an alt attribute at all.

<img src="decorative-frog-space.jpg" alt="" />

Columns

This tab includes the following columns.

- Address – The URL crawled.

- Content – The content type of the image (jpeg, gif, png etc).

- Size – Size of the image in kilobytes. File size is in bytes in the export, so divide by 1,024 to convert to kilobytes.

- Indexability – Whether the URL is Indexable or Non-Indexable.

- Indexability Status – The reason why a URL is Non-Indexable. For example, if it’s canonicalised to another URL.

Filters

This tab includes the following filters.

- Over 100kb – Large images over 100kb in size. Page speed is extremely important for users and SEO and often large resources such as images are one of the most common issues that slow down web pages. This filter simply acts as a general rule of thumb to help identify images that are fairly large in file size and may take longer to load. These should be considered for optimisation, alongside opportunities identified in the PageSpeed tab which uses the PSI API and Lighthouse to audit speed. This can help identify images that haven’t been optimised in size, load offscreen, are unoptimised etc.

- Missing Alt Text – Images that have an alt attribute, but are missing alt text. Click the address (URL) of the image and then the ‘Image Details’ tab in the lower window pane to view which pages have the image on, and which pages are missing alt text of the said image. Images should have descriptive alternative text about their purpose, which helps the blind and visually impaired and the search engines understand the image and its relevance to the web page. For decorative images a null (empty) alt text should be provided (alt=””) so that they can be ignored by assistive technologies, such as screen readers.

- Missing Alt Attribute – Images that are missing an alt attribute altogether. Click the address (URL) of the image and then the ‘Image Details’ tab in the lower window pane to view which pages have the image on, and are missing alt attributes. All images should contain an alt attribute with descriptive text, or blank when it’s a decorative image.

- Alt Text Over 100 Characters – Images which have one instance of alt text over 100 characters in length. This is not strictly an issue, however image alt text should be concise and descriptive. It should not be used to stuff lots of keywords or paragraphs of text onto a page.

- Background Images – CSS background and dynamically loaded images discovered across the website, which should be used for non-critical and decorative purposes. Background images are not typically indexed by Google and browsers do not provide alt attributes or text on background images to assistive technology. For this filter to populate, JavaScript rendering must be enabled, and crawl analysis needs to be performed.

- Missing Size Attributes – Image elements without dimensions (width and height size attributes) specified in the HTML. This can cause large layout shifts as the page loads and be a frustrating experience for users. It is one of the major reasons that contributes to a high Cumulative Layout Shift (CLS).

- Incorrectly Sized Images – Images identified where their real dimensions (WxH) do not match the display dimensions when rendered. If there is an estimated 4kb file size difference or more, the image is flagged for potential optimisation. In particular, this can help identify oversized images, which can contribute to poor page load speed. It can also help identify smaller sized images, that are being stretched when rendered. For this filter to populate, JavaScript rendering must be enabled, and crawl analysis needs to be performed.

For more on optimising images, please read our guide on How To View Alt Text & Find Missing Alt Text and consider using the PageSpeed Insights Integration. This has opportunities and diagnostics for ‘Properly Size Images’, ‘Defer Offscreen Images’, ‘Efficiently Encode Images’, ‘Serve Images in Next-Gen Formats’ and ‘Image Elements Do Not Have Explicit Width & Height’.
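The difference between ‘Missing Alt Text’ (an alt attribute is present but empty) and ‘Missing Alt Attribute’ (no alt attribute at all) can be illustrated with a short sketch using Python's standard `html.parser`. The names `ImageAltParser` and `audit_image_alts` are hypothetical, and the sketch only looks at img elements in the HTML source.

```python
from html.parser import HTMLParser


class ImageAltParser(HTMLParser):
    """Sort img elements by whether the alt attribute is absent or empty."""

    def __init__(self):
        super().__init__()
        self.missing_alt_attribute = []
        self.missing_alt_text = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = dict(attrs)
        src = attr.get("src") or ""
        if "alt" not in attr:
            # No alt attribute at all.
            self.missing_alt_attribute.append(src)
        elif not (attr["alt"] or "").strip():
            # alt attribute present, but empty (includes decorative images).
            self.missing_alt_text.append(src)


def audit_image_alts(html):
    """Return (missing_alt_attribute, missing_alt_text) lists of image src."""
    parser = ImageAltParser()
    parser.feed(html)
    return parser.missing_alt_attribute, parser.missing_alt_text


if __name__ == "__main__":
    html = (
        '<img src="screamingfrog-logo.jpg" alt="Screaming Frog" />'
        '<img src="decorative-frog-space.jpg" alt="" />'
        '<img src="no-alt.jpg" />'
    )
    print(audit_image_alts(html))
```

Note that intentionally decorative images with alt="" also appear in the second list, so the results still need a manual review.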

Canonicals

The canonicals tab shows canonical link elements and HTTP canonicals discovered during a crawl. The filters show common issues discovered for canonicals.

The rel=”canonical” element helps specify a single preferred version of a page when it’s available via multiple URLs. It’s a hint to the search engines to help prevent duplicate content, by consolidating indexing and link properties to a single URL to use in ranking.

The canonical link element should be placed in the head of the document and looks like this in HTML:

<link rel="canonical" href="https://www.example.com/" >

You can also use rel=”canonical” HTTP headers, which looks like this:

Link: <http://www.example.com>; rel="canonical"

Columns

This tab includes the following columns.

- Address – The URL crawled.

- Occurrences – The number of canonicals found (via both link element and HTTP).

- Indexability – Whether the URL is Indexable or Non-Indexable.

- Indexability Status – The reason why a URL is Non-Indexable. For example, if it’s canonicalised to another URL.

- Canonical Link Element 1/2 etc – Canonical link element data on the URL. The SEO Spider will find all instances if there are multiple.

- HTTP Canonical 1/2 etc – Canonical issued via HTTP. The SEO Spider will find all instances if there are multiple.

- Meta Robots 1/2 etc – Meta robots found on the URL. The SEO Spider will find all instances if there are multiple.

- X-Robots-Tag 1/2 etc – X-Robots-tag data. The SEO Spider will find all instances if there are multiple.

- rel=“next” and rel=“prev” – The SEO Spider collects these HTML link elements designed to indicate the relationship between URLs in a paginated series.

Filters

This tab includes the following filters.

- Contains Canonical – The page has a canonical URL set (either via link element, HTTP header or both). This could be a self-referencing canonical URL where the page URL is the same as the canonical URL, or it could be ‘canonicalised’, where the canonical URL is different to the page URL.

- Self Referencing – The URL has a canonical which is the same URL as the page URL crawled (hence, it’s self referencing). Ideally only canonical versions of URLs would be linked to internally, and every URL would have a self-referencing canonical to help avoid any potential duplicate content issues that can occur (even naturally on the web, such as tracking parameters on URLs, other websites incorrectly linking to a URL that resolves etc).

- Canonicalised – The page has a canonical URL that is different to itself. The URL is ‘canonicalised’ to another location. This means the search engines are being instructed to not index the page, and the indexing and linking properties should be consolidated to the target canonical URL. These URLs should be reviewed carefully. In a perfect world, a website wouldn’t need to canonicalise any URLs as only canonical versions would be linked to, but often they are required due to various circumstances outside of control, and to prevent duplicate content.

- Missing – There’s no canonical URL present either as a link element, or via HTTP header. If a page doesn’t indicate a canonical URL, Google will identify what they think is the best version or URL. This can lead to ranking unpredictability, and hence generally all URLs should specify a canonical version.

- Multiple – There are multiple canonicals set for a URL (either multiple link elements, HTTP header, or both combined). This can lead to unpredictability, as there should only be a single canonical URL set by a single implementation (link element, or HTTP header) for a page.

- Multiple Conflicting – Pages with multiple canonicals set for a URL that have different URLs specified (via either multiple link elements, HTTP header, or both combined). This can lead to unpredictability, as there should only be a single canonical URL set by a single implementation (link element, or HTTP header) for a page.

- Non-Indexable Canonical – The canonical URL is a non-indexable page. This will include canonicals which are blocked by robots.txt, no response, redirect (3XX), client error (4XX), server error (5XX) or are ‘noindex’. Canonical versions of URLs should always be indexable, ‘200’ response pages. Therefore, canonicals that go to non-indexable pages should be corrected to the resolving indexable versions.

- Canonical Is Relative – Pages that have a relative rather than absolute rel=”canonical” link tag. While the tag, like many HTML tags, accepts both relative and absolute URLs, it’s easy to make subtle mistakes with relative paths that could cause indexing-related issues.

- Unlinked – URLs that are only discoverable via rel=”canonical” and are not linked-to via hyperlinks on the website. This might be a sign of a problem with internal linking, or the URLs contained in the canonical.

- Outside <head> – Pages with a canonical link element that is outside of the head element in the HTML. The canonical link element should be within the head element, or search engines will ignore it.

Please see our Learn SEO guide on canonicals, and our ‘How to Audit Canonicals‘ tutorial.
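Several of the canonical filters above come down to comparing the crawled URL with the canonical URL(s) found in the HTML. The sketch below shows one way this comparison could work, using Python's standard `html.parser` and `urllib.parse`. It is a simplified illustration and not how the SEO Spider works internally: the names are hypothetical, only canonical link elements are read (not HTTP headers), and URLs are compared as exact strings without any normalisation.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class CanonicalParser(HTMLParser):
    """Collect href values from <link rel="canonical"> elements."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "link":
            attr = dict(attrs)
            rel_values = (attr.get("rel") or "").lower().split()
            if "canonical" in rel_values and attr.get("href"):
                self.hrefs.append(attr["href"])


def canonical_filters(page_url, html):
    """Hypothetical helper: return the canonical filters for a page."""
    parser = CanonicalParser()
    parser.feed(html)
    hrefs = parser.hrefs
    if not hrefs:
        return ["Missing"]

    filters = ["Contains Canonical"]
    # Resolve relative hrefs against the page URL before comparing.
    resolved = {urljoin(page_url, href) for href in hrefs}
    if len(hrefs) > 1:
        filters.append("Multiple")
        if len(resolved) > 1:
            filters.append("Multiple Conflicting")
    # Assumption: an href without a scheme is treated as relative.
    if any(not urlparse(href).scheme for href in hrefs):
        filters.append("Canonical Is Relative")
    first = urljoin(page_url, hrefs[0])
    filters.append("Self Referencing" if first == page_url else "Canonicalised")
    return filters


if __name__ == "__main__":
    html = '<head><link rel="canonical" href="https://www.example.com/" ></head>'
    print(canonical_filters("https://www.example.com/", html))
```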

Pagination

The pagination tab includes information on rel=”next” and rel=”prev” HTML link elements discovered in a crawl, which are used to indicate the relationship between component URLs in a paginated series. The filters show common issues discovered for pagination.

While Google announced on the 21st of March 2019 that they have not used rel=”next” and rel=”prev” in indexing for a long time, other search engines such as Bing (which also powers Yahoo), still use it as a hint for discovery and understanding site structure.

Pagination attributes should be placed in the head of the document and look like this in HTML:

<link rel="prev" href="https://www.example.com/seo/"/>

<link rel="next" href="https://www.example.com/seo/page/2/"/>

Columns

This tab includes the following columns.

- Address – The URL crawled.

- Occurrences – The number of rel=“next” and rel=“prev” elements found.

- Indexability – Whether the URL is Indexable or Non-Indexable.

- Indexability Status – The reason why a URL is Non-Indexable. For example, if it’s canonicalised to another URL.

- rel=“next” – The SEO Spider collects these HTML link elements designed to indicate the relationship between URLs in a paginated series.

- rel=“prev” – The SEO Spider collects these HTML link elements designed to indicate the relationship between URLs in a paginated series.

- Canonical Link Element 1/2 etc – Canonical link element data on the URI. The SEO Spider will find all instances if there are multiple.

- HTTP Canonical 1/2 etc – Canonical issued via HTTP. The SEO Spider will find all instances if there are multiple.

- Meta Robots 1/2 etc – Meta robots found on the URI. The SEO Spider will find all instances if there are multiple.

- X-Robots-Tag 1/2 etc – X-Robots-tag data. The SEO Spider will find all instances if there are multiple.

Filters

This tab includes the following filters.

- Contains Pagination – The URL has a rel=”next” and/or rel=”prev” attribute, indicating it’s part of a paginated series.

- First Page – The URL only has a rel=“next” attribute, indicating it’s the first page in a paginated series. It’s easy and useful to scroll through these URLs and ensure they are accurately implemented on the parent page in the series.

- Paginated 2+ Pages – The URL has a rel=“prev” on it, indicating it’s not the first page, but a paginated page in a series. Again, it’s useful to scroll through these URLs and ensure only paginated pages appear under this filter.

- Pagination URL Not In Anchor Tag – A URL contained in either, or both, of the rel=”next” and rel=”prev” attributes of the page is not found as a hyperlink in an HTML anchor element on the page itself. Paginated pages should be linked to with regular links to allow users to click and navigate to the next page in the series. They also allow Google to crawl from page to page, and PageRank to flow between pages in the series. Google’s own Webmaster Trends analyst John Mueller recommended proper HTML links for pagination as well in a Google Webmaster Central Hangout.

- Non-200 Pagination URL – The URLs contained in the rel=”next” and rel=”prev” attributes do not respond with a 200 ‘OK’ status code. This can include URLs blocked by robots.txt, no responses, 3XX (redirects), 4XX (client errors) or 5XX (server errors). Pagination URLs must be crawlable and indexable and therefore non-200 URLs are treated as errors, and ignored by the search engines. The non-200 pagination URLs can be exported in bulk via the ‘Reports > Pagination > Non-200 Pagination URLs’ export.

- Unlinked Pagination URL – The URLs contained in the rel=”next” and rel=”prev” attributes are not linked to across the website. Pagination attributes may not pass PageRank like a traditional anchor element, so this might be a sign of a problem with internal linking, or the URLs contained in the pagination attribute. The unlinked pagination URLs can be exported in bulk via the ‘Reports > Pagination > Unlinked Pagination URLs’ export.

- Non-Indexable – The paginated URL is non-indexable. Generally they should all be indexable, unless there is a ‘view-all’ page set, or there are extra parameters on pagination URLs, and they require canonicalising to a single URL. One of the most common mistakes is canonicalising page 2+ paginated pages to the first page in a series. Google recommend against this implementation because the component pages don’t actually contain duplicate content. Another common mistake is using ‘noindex’, which can mean Google drops paginated URLs from the index completely and stops following outlinks from those pages, which can be a problem for the products on those pages. This filter will help identify these common set-up issues.

- Multiple Pagination URLs – There are multiple rel=”next” and rel=”prev” attributes on the page (when there shouldn’t be more than a single rel=”next” or rel=”prev” attribute). This may mean that they are ignored by the search engines.

- Pagination Loop – This will show URLs that have rel=”next” and rel=”prev” attributes that loop back to a previously encountered URL. Again, this might mean that the expressed pagination series is simply ignored by the search engines.

- Sequence Error – This shows URLs that have an error in the rel=”next” and rel=”prev” HTML link elements sequence. This check ensures that URLs contained within rel=”next” and rel=”prev” HTML link elements reciprocate and confirm their relationship in the series.
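Several of the filters above follow directly from which rel=”next” and rel=”prev” link elements a page carries. The Python sketch below illustrates that logic using only the standard library. It is not how the SEO Spider is implemented: the names `PaginationLinkParser`, `pagination_filters` and `sequence_errors` are hypothetical, and the reciprocity check is an assumed reading of the ‘Sequence Error’ filter that only looks in the rel=”next” direction.

```python
from html.parser import HTMLParser


class PaginationLinkParser(HTMLParser):
    """Collects href values of <link rel="next"> and <link rel="prev"> elements."""

    def __init__(self):
        super().__init__()
        self.links = {"next": [], "prev": []}

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        for rel in ("next", "prev"):
            if rel in rel_values and attr.get("href"):
                self.links[rel].append(attr["href"])


def pagination_filters(html):
    """Return the filter names (as listed above) that would apply to one page."""
    parser = PaginationLinkParser()
    parser.feed(html)
    nxt, prv = parser.links["next"], parser.links["prev"]
    filters = []
    if nxt or prv:
        filters.append("Contains Pagination")
    if nxt and not prv:
        filters.append("First Page")
    if prv:
        filters.append("Paginated 2+ Pages")
    if len(nxt) > 1 or len(prv) > 1:
        filters.append("Multiple Pagination URLs")
    return filters


def sequence_errors(series):
    """Assumed reciprocity check, rel="next" direction only.

    `series` maps URL -> {"next": url or None, "prev": url or None}.
    Returns URLs whose rel="next" target does not point back with rel="prev".
    """
    errors = []
    for url, links in series.items():
        target = links.get("next")
        if target and series.get(target, {}).get("prev") != url:
            errors.append(url)
    return errors


page = (
    '<head><link rel="prev" href="https://www.example.com/seo/"/>'
    '<link rel="next" href="https://www.example.com/seo/page/2/"/></head>'
)
print(pagination_filters(page))  # ['Contains Pagination', 'Paginated 2+ Pages']
```

Checks that depend on crawl data, such as ‘Non-200 Pagination URL’ or ‘Unlinked Pagination URL’, need response codes and link data from the rest of the site, so they are left out of this sketch.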

For more information on pagination, please read our guide on ‘How To Audit rel=”next” and rel=”prev” Pagination Attributes‘.

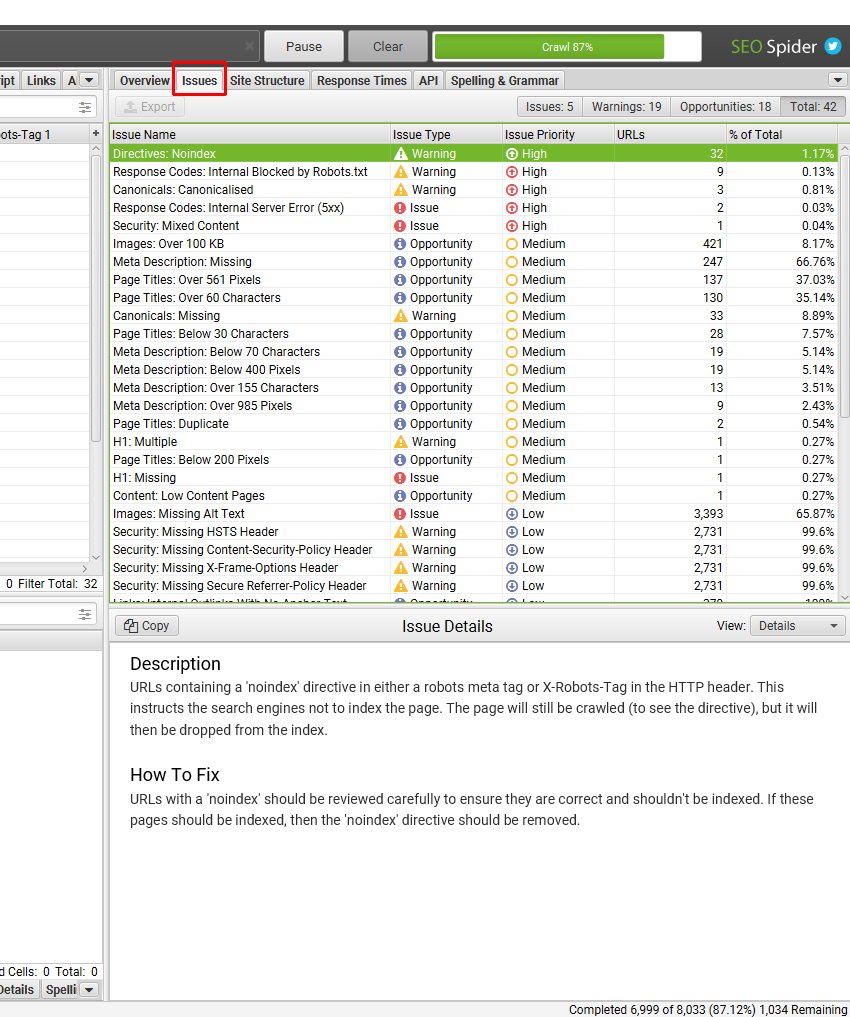

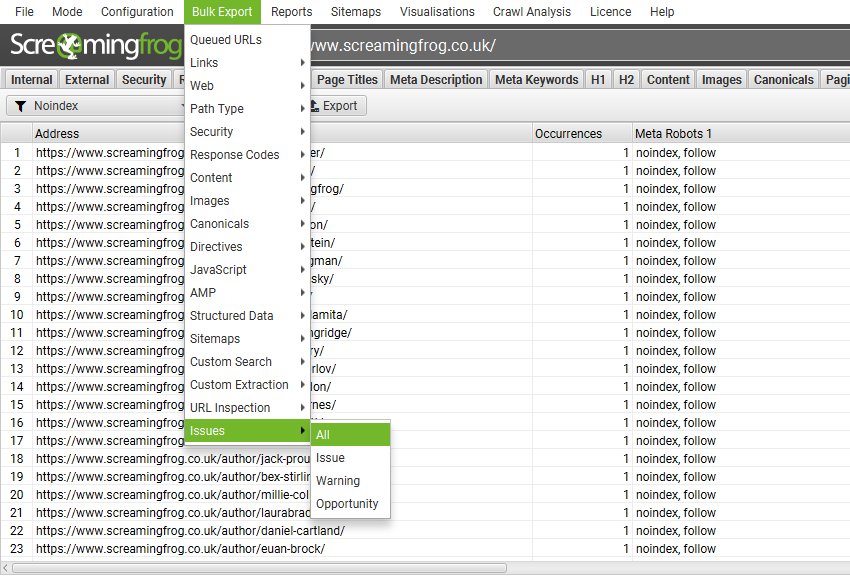

Directives

The directives tab shows data related to the meta robots tag, and the X-Robots-Tag in the HTTP Header. These robots directives can control how your content and URLs are displayed in search engines, such as Google.

The meta robots tag should be placed in the head of the document and an example of a ‘noindex’ meta tag looks like this in HTML:

<meta name="robots" content="noindex"/>

The same directive can be issued in the HTTP header using the X-Robots-Tag, which looks like this:

X-Robots-Tag: noindex
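As a rough illustration of how both sources can be read for a single URL, the following Python sketch collects directives from meta robots tags in the HTML and from X-Robots-Tag response headers. The names `MetaRobotsParser`, `split_directives` and `collect_directives` are hypothetical, and the parsing is deliberately simplified: it assumes plain comma-separated directives, and ignores user-agent prefixes and date values that contain commas.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects the content of every <meta name="robots"> element."""

    def __init__(self):
        super().__init__()
        self.contents = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            self.contents.append(attr.get("content") or "")


def split_directives(value):
    """Split a comma-separated directive string into lowercase tokens."""
    return [part.strip().lower() for part in value.split(",") if part.strip()]


def collect_directives(html, headers):
    """Return directives found in meta robots tags and X-Robots-Tag headers.

    `headers` is assumed to be a list of (name, value) pairs, so that
    repeated X-Robots-Tag headers are preserved.
    """
    parser = MetaRobotsParser()
    parser.feed(html)
    meta = [d for content in parser.contents for d in split_directives(content)]
    header = [
        d
        for name, value in headers
        if name.lower() == "x-robots-tag"
        for d in split_directives(value)
    ]
    return {"meta_robots": meta, "x_robots_tag": header}


html = '<head><meta name="robots" content="noindex"/></head>'
headers = [("Content-Type", "text/html"), ("X-Robots-Tag", "noindex")]
print(collect_directives(html, headers))
# {'meta_robots': ['noindex'], 'x_robots_tag': ['noindex']}
```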

Columns

This tab includes the following columns.

- Address – The URL crawled.

- Meta Robots 1/2 etc – Meta robots directives found on the URL. The SEO Spider will find all instances if there are multiple.

- X-Robots-Tag 1/2 etc – X-Robots-tag HTTP header directives for the URL. The SEO Spider will find all instances if there are multiple.

Filters

This tab includes the following filters.

- Index – This allows the page to be indexed. It’s unnecessary, as search engines will index URLs without it.

- Noindex – This instructs the search engines not to index the page. The page will still be crawled (to see the directive), but it will then be dropped from the index. URLs with a ‘noindex’ should be inspected carefully.

- Follow – This instructs any links on the page to be followed for crawling. It’s unnecessary, as search engines will follow them by default.

- Nofollow – This is a ‘hint’ which tells the search engines not to follow any links on the page for crawling. This is generally used by mistake in combination with ‘noindex’, when there is no need to include this directive. To crawl pages with a meta nofollow tag the configuration ‘Follow Internal Nofollow’ must be enabled under ‘Config > Spider’.

- None – This does not mean there are no directives in place. It means the meta tag ‘none’ is being used, which is the equivalent to “noindex, nofollow”. These URLs should be reviewed carefully to ensure they are being correctly kept out of the search engines indexes.

- NoArchive – This instructs Google not to show a cached link for a page in the search results.

- NoSnippet – This instructs Google not to show a text snippet or video preview in the search results.

- Max-Snippet – This value allows you to limit the text snippet length for this page to [number] characters in Google. Special values are 0 for no snippet, and -1 to allow any snippet length.

- Max-Image-Preview – This value can limit the size of any image associated with this page in Google. Setting values can be “none”, “standard”, or “large”.

- Max-Video-Preview – This value can limit any video preview associated with this page to [number] seconds in Google. You can also specify 0 to allow only a still image, or -1 to allow any preview length.

- NoODP – This is an old meta tag that used to instruct Google not to use the Open Directory Project for its snippets. This can be removed.

- NoYDIR – This is an old meta tag that used to instruct Google not to use the Yahoo Directory for its snippets. This can be removed.

- NoImageIndex – This tells Google not to show the page as the referring page for an image in the Image search results. This has the effect of preventing all images on this page from being indexed through this page.

- NoTranslate – This value tells Google that you don’t want them to provide a translation for this page.

- Unavailable_After – This allows you to specify the exact time and date you want Google to stop showing the page in their search results.

- Refresh – This redirects the user to a new URL after a certain amount of time. We recommend reviewing meta refresh data within the response codes tab.

- Outside <head> – Pages with a meta robots that is outside of the head element in the HTML. The meta robots should be within the head element, or search engines may ignore it. Google will typically still recognise meta robots such as a ‘noindex’ directive, even outside of the head element, however this should not be relied upon.
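The way these directives combine can be sketched in a few lines of Python. The function below is a hypothetical helper, not part of the SEO Spider: it takes a single comma-separated directive string, treats ‘none’ as equivalent to “noindex, nofollow” as described above, and records any max-snippet, max-image-preview or max-video-preview values without validating them.

```python
def interpret_robots(content):
    """Interpret a comma-separated robots directive string.

    Returns a dict with the effective index/follow state and any
    max-snippet, max-image-preview or max-video-preview limits found.
    """
    result = {"index": True, "follow": True, "limits": {}}
    for token in content.lower().split(","):
        token = token.strip()
        # 'none' is shorthand for both noindex and nofollow.
        if token in ("noindex", "none"):
            result["index"] = False
        if token in ("nofollow", "none"):
            result["follow"] = False
        if ":" in token:
            name, _, value = token.partition(":")
            name = name.strip()
            if name in ("max-snippet", "max-image-preview", "max-video-preview"):
                result["limits"][name] = value.strip()
    return result


print(interpret_robots("none"))
# {'index': False, 'follow': False, 'limits': {}}
print(interpret_robots("index, follow, max-snippet:-1, max-image-preview:large"))
# {'index': True, 'follow': True, 'limits': {'max-snippet': '-1', 'max-image-preview': 'large'}}
```

Absent directives default to index and follow here, which mirrors the point made above that ‘index’ and ‘follow’ are unnecessary.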

In this tab we also display columns for meta refresh and canonicals. However, we recommend reviewing meta refresh data within the response codes tab and relevant filter, and canonicals within the canonicals tab.

hreflang

The hreflang tab includes details of hreflang annotations crawled by the SEO Spider, delivered by HTML link element, HTTP Header or XML Sitemap. The filters show common issues discovered for hreflang.

Hreflang is useful when you have multiple versions of a page for different languages or regions. It tells Google about these different variations and helps them show the most appropriate version of your page by language or region.

Hreflang link elements should be placed in the head of the document and look like this in HTML:

<link rel="alternate" hreflang="en-gb" href="https://www.example.com" >

<link rel="alternate" hreflang="en-us" href="https://www.example.com/us/" >

‘Store Hreflang‘ and ‘Crawl Hreflang‘ options need to be enabled (under ‘Config > Spider’) for this tab and respective filters to be populated. To extract hreflang annotations from XML Sitemaps during a regular crawl ‘Crawl Linked XML Sitemaps‘ must be selected as well.
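For readers who want to spot-check a page by hand, the link elements shown above can be pulled out of the HTML with a short Python sketch like the one below. `HreflangParser` and `extract_hreflang` are hypothetical names, and the sketch only covers the HTML link element implementation, not HTTP headers or XML Sitemaps.

```python
from html.parser import HTMLParser


class HreflangParser(HTMLParser):
    """Collects (hreflang, href) pairs from <link rel="alternate" hreflang="..."> elements."""

    def __init__(self):
        super().__init__()
        self.annotations = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "alternate" in rel_values and attr.get("hreflang") and attr.get("href"):
            self.annotations.append((attr["hreflang"].lower(), attr["href"]))


def extract_hreflang(html):
    """Return a list of (language-region code, URL) pairs found in the HTML."""
    parser = HreflangParser()
    parser.feed(html)
    return parser.annotations


html = (
    '<head>'
    '<link rel="alternate" hreflang="en-gb" href="https://www.example.com" >'
    '<link rel="alternate" hreflang="en-us" href="https://www.example.com/us/" >'
    '</head>'
)
for code, url in extract_hreflang(html):
    print(code, url)
# en-gb https://www.example.com
# en-us https://www.example.com/us/
```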

Columns

This tab includes the following columns.

- Address – The URL crawled.

- Title 1/2 etc – The page title element of the page.

- Occurrences – The number of hreflang annotations discovered on a page.

- HTML hreflang 1/2 etc – The hreflang language and region code from any HTML link element on the page.

- HTML hreflang 1/2 URL etc – The hreflang URL from any HTML link element on the page.

- HTTP hreflang 1/2 etc – The hreflang language and region code from the HTTP Header.

- HTTP hreflang 1/2 URL etc – The hreflang URL from the HTTP Header.

- Sitemap hreflang 1/2 etc – The hreflang language and region code from the XML Sitemap. Please note, this only populates when crawling the XML Sitemap in list mode.

- Sitemap hreflang 1/2 URL etc – The hreflang URL from the XML Sitemap. Please note, this only populates when crawling the XML Sitemap in list mode.

Filters

This tab includes the following filters.

- Contains Hreflang – These are simply any URLs that have rel=”alternate” hreflang annotations from any implementation, whether link element, HTTP header or XML Sitemap.

- Non-200 Hreflang URLs – These are URLs contained within rel=”alternate” hreflang annotations that do not have a 200 response code, such as URLs blocked by robots.txt, no responses, 3XX (redirects), 4XX (client errors) or 5XX (server errors). Hreflang URLs must be crawlable and indexable and therefore non-200 URLs are treated as errors, and ignored by the search engines. The non-200 hreflang URLs can be seen in the lower window ‘URL Info’ pane with a ‘non-200’ confirmation status. They can be exported in bulk via the ‘Reports > Hreflang > Non-200 Hreflang URLs’ export.

- Unlinked Hreflang URLs – These are pages that contain one or more hreflang URLs that are only discoverable via their rel=”alternate” hreflang link annotations. Hreflang annotations do not pass PageRank like a traditional anchor tag, so this might be a sign of a problem with internal linking, or the URLs contained in the hreflang annotation. To find out exactly which hreflang URLs on these pages are unlinked, use the ‘Reports > Hreflang > Unlinked Hreflang URLs’ export.

- Missing Return Links – These are URLs with missing return links (or ‘return tags’ in Google Search Console) to them, from their alternate pages. Hreflang is reciprocal, so all alternate versions must confirm the relationship. When page X links to page Y using hreflang to specify it as its alternate page, page Y must have a return link. No return links means the hreflang annotations may be ignored or not interpreted correctly. The missing return links URLs can be seen in the lower window ‘URL Info’ pane with a ‘missing’ confirmation status. They can be exported in bulk via the ‘Reports > Hreflang > Missing Return Links’ export.

- Inconsistent Language & Region Return Links – This filter includes URLs with inconsistent language and regional return links to them. This is where a return link has a different language or regional value than the one the URL uses to reference itself. The inconsistent language return URLs can be seen in the lower window ‘URL Info’ pane with an ‘Inconsistent’ confirmation status. They can be exported in bulk via the ‘Reports > Hreflang > Inconsistent Language Return Links’ export.

- Non-Canonical Return Links – URLs with non canonical hreflang return links. Hreflang should only include canonical versions of URLs. So this filter picks up return links that go to URLs that are not the canonical versions. The non canonical return URLs can be seen in the lower window ‘URL Info’ pane with a ‘Non Canonical’ confirmation status. They can be exported in bulk via the ‘Reports > Hreflang > Non Canonical Return Links’ export.

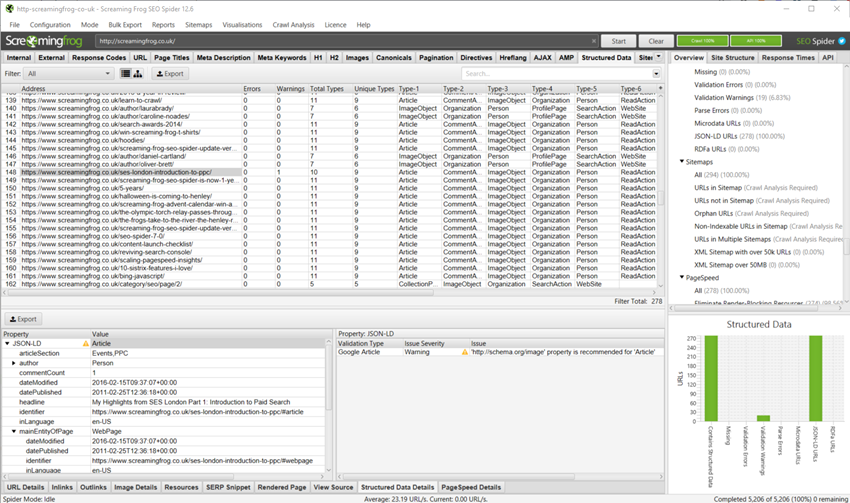

- Noindex Return Links – Return links which have a ‘noindex’ meta tag. All pages within a set should be indexable, and hence any return URLs with ‘noindex’ may result in the hreflang relationship being ignored. The noindex return links URLs can be seen in the lower window ‘URL Info’ pane with a ‘noindex’ confirmation status. They can be exported in bulk via the ‘Reports > Hreflang > Noindex Return Links’ export.